Learn how to generate a robots.txt file to control crawlers and boost technical SEO with a practical, hands-on guide.

January 11, 2026

Master SEO: generate a robots.txt file with this quick guide

Generate robots.txt: Quick SEO Guide

Summary: Learn how to create and deploy a robots.txt file to control crawlers, protect content, and optimize crawl budget with practical examples.

Introduction

A well-crafted robots.txt file gives you control over which crawlers can access your site, helps protect valuable content, and focuses crawl budget on the pages that matter. This guide shows clear, practical steps for creating, testing, and updating your robots.txt so search engines and polite bots behave the way you want them to.

To get started

Create a plain text file named robots.txt, add directives like User-agent: and Disallow:, and upload it to your site’s root at https://yourdomain.com/robots.txt. That single file acts as a simple instruction set for search engine crawlers and other bots.
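If you would rather generate the file from a script than type it, the minimal Python sketch below renders directive groups into plain UTF-8 text. The `render_robots_txt` and `save_robots_txt` helpers are hypothetical (not part of any library), and the paths and sitemap URL are placeholders.

```python
from pathlib import Path


def render_robots_txt(groups, sitemaps=()):
    """Render robots.txt text (hypothetical helper).

    groups: list of (user_agents, rules) pairs, where rules is a list of
            (directive, path) tuples such as ("Disallow", "/wp-admin/").
    sitemaps: absolute sitemap URLs; each becomes one Sitemap line.
    """
    lines = []
    for user_agents, rules in groups:
        lines.extend(f"User-agent: {agent}" for agent in user_agents)
        lines.extend(f"{directive}: {path}" for directive, path in rules)
        lines.append("")  # a blank line ends each group
    lines.extend(f"Sitemap: {url}" for url in sitemaps)
    # Trim trailing blank lines and end the file with a single newline.
    return "\n".join(lines).rstrip("\n") + "\n"


def save_robots_txt(text, site_root):
    """Write the file as plain UTF-8 text into a local web root directory."""
    path = Path(site_root) / "robots.txt"
    path.write_text(text, encoding="utf-8")
    return path


if __name__ == "__main__":
    print(render_robots_txt(
        groups=[(["*"], [("Disallow", "/wp-admin/")])],
        sitemaps=["https://yourdomain.com/sitemap.xml"],
    ), end="")
```

Keeping the rules as data makes it easy to review changes in version control before the file is uploaded to the site root.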

Why your robots.txt file is a critical SEO tool

Working with robots.txt can feel technical, but the file is your website’s gatekeeper. Used correctly, it preserves server resources and steers crawlers toward high-value pages, which helps those pages get crawled and indexed. The Robots Exclusion Protocol dates back to 1994, was formalized as RFC 9309 in 2022, and has become a key piece of site infrastructure.1

A focused robots.txt helps you steer crawlers to the pages that bring traffic, leads, and revenue while keeping them away from areas that waste crawl budget, such as admin pages, internal search results, and duplicate print-friendly versions of pages.

A robots.txt file is more than an exclusion list; it’s a strategic guide for search engines. It tells them where to spend their limited time on your site, which influences which pages get crawled and, in turn, indexed.

Ultimately, getting robots.txt right means better SEO performance, preserved server resources, and clearer discovery for your most valuable content.

Understanding the language of web crawlers

Robots.txt is a short conversation with crawlers. The file uses simple directives to indicate which doors are open or closed. These commands are straightforward and form the foundation of a healthy technical SEO strategy.

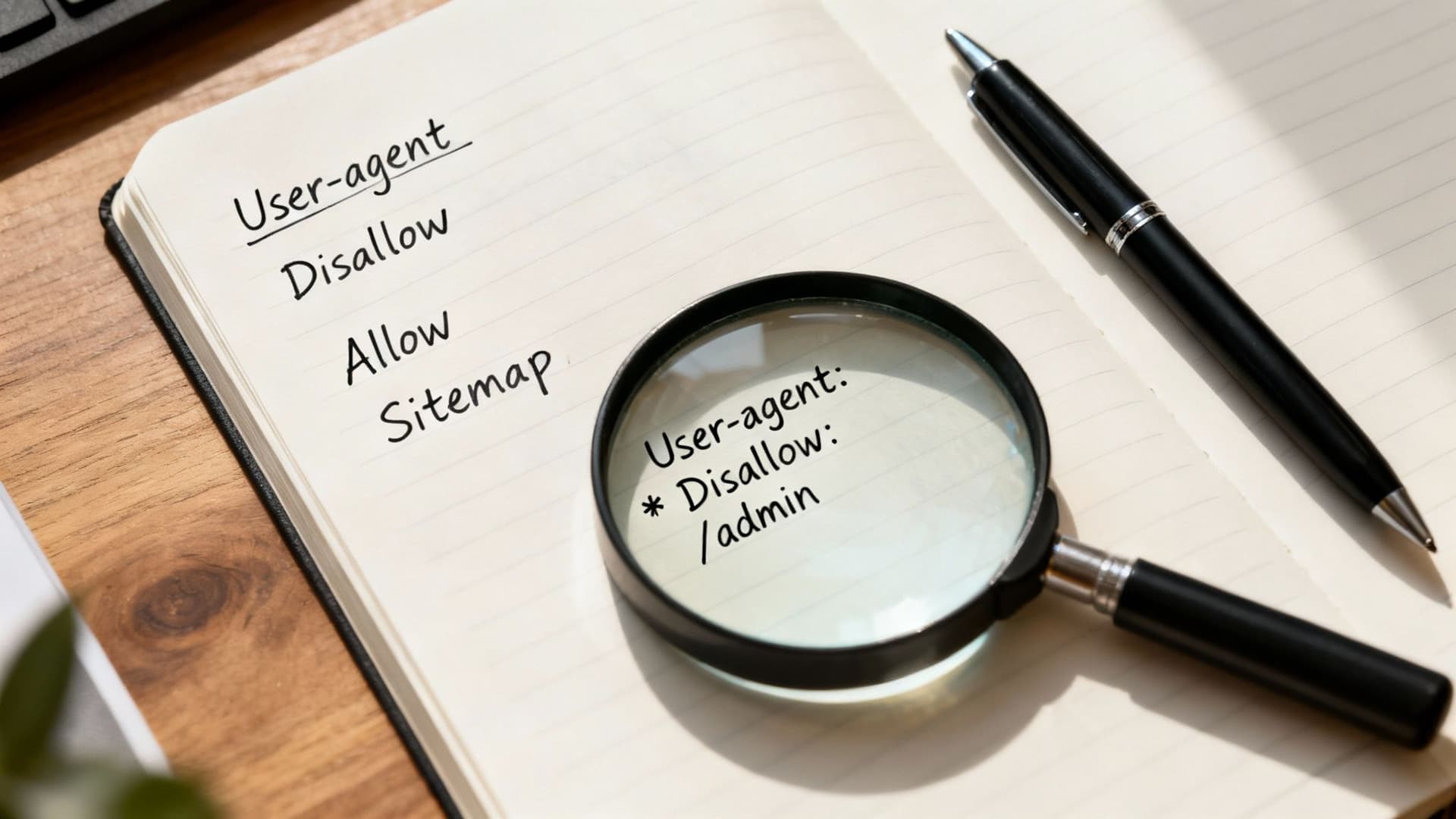

Core robots.txt directives explained

| Directive | What it does | When to use it |
|---|---|---|
| User-agent | Specifies which crawler the rules apply to. * is a wildcard for all bots. | Use User-agent: * for general rules, or specify Googlebot or Bingbot for targeted instructions. |
| Disallow | Tells bots not to crawl a specific file or directory. | Block admin login pages (/wp-admin/), internal search results, or unhelpful thank-you pages. |
| Allow | Overrides a Disallow for a specific subfolder or file. | Allow a single file inside a disallowed directory. |
| Sitemap | Points crawlers to your XML sitemap so they can discover important pages. | Always include this to speed discovery of pages you want indexed. |

These simple commands give you strong control over crawler behavior. Mastering them helps you create an effective robots.txt file.

Putting directives into practice

If you have an important interactive tool—say, a lead-generating calculator—you want search engines to crawl and index it. At the same time, you’ll want to block pages that don’t add SEO value. A common, practical robots.txt looks like this:

```text
User-agent: *
Disallow: /wp-admin/
Disallow: /private-files/
Disallow: /cgi-bin/
Sitemap: https://yourdomain.com/sitemap.xml
```

This setup uses User-agent: * to address all bots, blocks a few common non-public folders, and points crawlers to the sitemap. It’s clean and efficient.
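Python’s standard library includes `urllib.robotparser`, which you can use for a quick local check of how rules like these are read. This is a sketch: `/tools/calculator/` is a hypothetical URL, and this parser is simpler than Googlebot (it uses the first matching rule and does not expand `*` or `$` wildcards inside paths), so treat the result as a sanity check rather than a guarantee of how Google behaves.

```python
from urllib.robotparser import RobotFileParser

# The example rules from above, one directive per line.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /private-files/
Disallow: /cgi-bin/
Sitemap: https://yourdomain.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse() also marks the rules as loaded

# /tools/calculator/ is a hypothetical page used for illustration.
for path in ["/", "/tools/calculator/", "/wp-admin/options.php", "/cgi-bin/test"]:
    allowed = parser.can_fetch("*", "https://yourdomain.com" + path)
    print(f"{path}: {'allowed' if allowed else 'blocked'}")

print("Sitemaps:", parser.site_maps())  # site_maps() needs Python 3.8+
```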

To learn more about how crawlers behave, analyze server logs and visitor data. Once you understand which bots are hitting your site, you can craft rules that support your business goals.
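As a starting point for log analysis, the Python sketch below tallies requests per user agent. It assumes access logs in the Combined Log Format (where the user agent is the last quoted field), and the sample lines are made up for illustration. User-agent strings are self-reported and easy to fake, so treat these counts as a first pass rather than proof of which bots visited.

```python
import re
from collections import Counter

# In the Combined Log Format, the user agent is the last quoted field on a line.
USER_AGENT_FIELD = re.compile(r'"([^"]*)"\s*$')

# Made-up sample lines for illustration only.
SAMPLE_LOG = [
    '203.0.113.5 - - [11/Jan/2026:10:00:00 +0000] "GET / HTTP/1.1" 200 512 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '198.51.100.7 - - [11/Jan/2026:10:00:02 +0000] "GET /robots.txt HTTP/1.1" 200 80 "-" '
    '"Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '192.0.2.9 - - [11/Jan/2026:10:00:05 +0000] "GET /pricing HTTP/1.1" 200 2048 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]


def count_user_agents(log_lines):
    """Count requests per full user-agent string."""
    counts = Counter()
    for line in log_lines:
        match = USER_AGENT_FIELD.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


def hits_by_bot(counts, bot_tokens):
    """Sum requests per bot token using a case-insensitive substring match."""
    totals = Counter()
    for agent, n in counts.items():
        for token in bot_tokens:
            if token.lower() in agent.lower():
                totals[token] += n
    return totals


if __name__ == "__main__":
    counts = count_user_agents(SAMPLE_LOG)
    for token, n in hits_by_bot(counts, ["Googlebot", "GPTBot", "CCBot"]).items():
        print(token, n)
```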

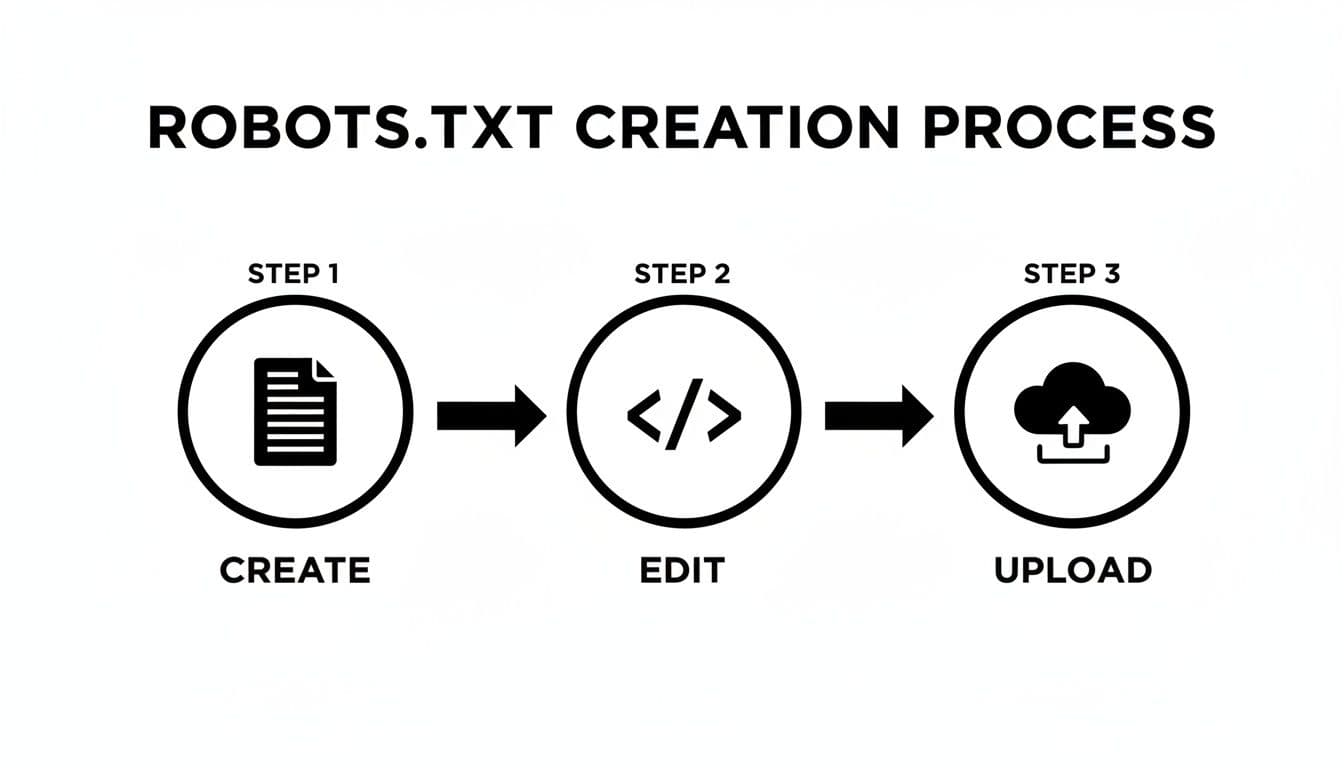

A practical guide to creating your robots.txt file manually

Sometimes the simplest option is to create the file by hand. Use a plain text editor such as Notepad or TextEdit (in TextEdit, choose Format > Make Plain Text first), save the file as robots.txt in lowercase, and upload it to your site root via FTP or your host’s file manager. If you save it as .docx or .rtf, crawlers will ignore it.

Place it at https://yourdomain.com/robots.txt. Crawlers only look for robots.txt at the root of each host, so a file uploaded to a subfolder, like /blog/robots.txt, is never read.
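Because crawlers look for the file per scheme and host, every subdomain (for example, blog.yourdomain.com) needs its own robots.txt. The small Python sketch below, using a hypothetical `robots_url` helper, shows which robots.txt URL governs any given page.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url):
    """Return the robots.txt URL that governs page_url (hypothetical helper)."""
    parts = urlsplit(page_url)
    # Keep the scheme and host (including any port); replace path, query, fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    print(robots_url("https://yourdomain.com/blog/post-1?ref=newsletter"))
```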

Crafting rules for real-world business goals

Example: a financial services site with a lead-generating Mortgage Calculator. You want that tool indexed, but you don’t want admin areas or thank-you pages in search results. Set your rules like this:

```text
User-agent: *
Allow: /
Disallow: /admin/
Disallow: /thank-you-for-your-submission/
Sitemap: https://www.yourfinancialsite.com/sitemap.xml
```

This tells all crawlers they can crawl the site except /admin/ and the thank-you page, and points them to a sitemap. Include a sitemap line like this to help crawlers find deep or important pages faster.

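Note: Order can matter in more complex files. When Allow and Disallow rules overlap, Googlebot (and any parser following RFC 9309) applies the most specific rule, meaning the one with the longest matching path, regardless of where it appears; if an Allow and a Disallow rule are equally specific, Allow wins. Some simpler parsers apply the first rule that matches instead, so listing the most specific rule first is a safe habit that avoids unintentionally blocking content.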
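To see why the two strategies can disagree, here is a simplified Python sketch of both. The `matching_rule` and `is_allowed` helpers are hypothetical and only handle plain path prefixes (no `*` or `$` wildcards), so they illustrate the precedence logic rather than replicate any particular crawler.

```python
def matching_rule(rules, path, first_match=False):
    """Pick the rule that decides whether `path` may be crawled.

    rules: (directive, prefix) tuples in file order, e.g. ("Disallow", "/private/").
    Simplified: plain prefix matching only; an empty value matches nothing.
    """
    matches = [(d, p) for d, p in rules if p and path.startswith(p)]
    if not matches:
        return None  # no rule applies, so crawling is allowed
    if first_match:
        return matches[0]  # what simple first-match parsers do
    # RFC 9309 / Googlebot style: longest prefix wins; on a tie, Allow wins.
    return max(matches, key=lambda rule: (len(rule[1]), rule[0] == "Allow"))


def is_allowed(rules, path, first_match=False):
    rule = matching_rule(rules, path, first_match)
    return rule is None or rule[0] == "Allow"


# Deliberately "wrong" order: the broad Disallow comes first.
RULES = [
    ("Disallow", "/private/"),
    ("Allow", "/private/public-report.pdf"),
]
```

With this order, the longest-match strategy still allows the PDF, while a first-match parser blocks it; putting the Allow line first makes both agree.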

Creating and testing your robots.txt is a core step in any technical SEO audit.

Managing the new wave of AI and LLM crawlers

The crawler landscape now includes AI-specific bots such as OpenAI’s GPTBot, along with crawlers like Common Crawl’s CCBot, whose datasets are widely used to train AI models. Traffic from these crawlers has grown quickly, and many sites now add rules for them. Well-behaved AI crawlers obey robots.txt, but malicious scrapers may not.2

Why you might block AI crawlers

Blocking AI crawlers can protect original content from being scraped and used for model training without attribution or compensation. If you’ve built a unique online tool, you may not want its logic or data harvested by third parties. Weigh the benefits of visibility on AI-driven platforms against the risk of your content being reused.

Analyzing log files helps you see which bots visit your site and informs whether to block them.3

How to block common AI bots

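Add a separate User-agent group for each crawler you want to block. Common tokens include GPTBot, CCBot, Google-Extended, and Anthropic-AI. Google-Extended is a control token rather than a separate crawler: disallowing it opts your content out of use for Google’s generative AI models without affecting Google Search. To block a bot entirely: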

```text
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: Google-Extended
Disallow: /
```
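If you maintain a list of AI crawler tokens, you can generate these groups instead of editing them by hand. The Python sketch below uses a hypothetical `ai_block_rules` helper with the tokens from the example above (check each vendor’s documentation for current tokens), then verifies the result with the standard library’s `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser

# Tokens from the example above; vendors may add or rename tokens over time.
AI_CRAWLERS = ["GPTBot", "CCBot", "Google-Extended"]


def ai_block_rules(tokens):
    """Return robots.txt groups that disallow the whole site for each token."""
    groups = [f"User-agent: {token}\nDisallow: /" for token in tokens]
    return "\n\n".join(groups) + "\n"


parser = RobotFileParser()
parser.parse(ai_block_rules(AI_CRAWLERS).splitlines())

# Listed AI crawlers are blocked; crawlers with no matching group are unaffected.
print(parser.can_fetch("GPTBot", "https://yourdomain.com/"))
print(parser.can_fetch("Googlebot", "https://yourdomain.com/"))
```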

Remember, robots.txt is an honor system. Reputable crawlers will respect your rules, but malicious actors may ignore them. For stronger protection, consider a Web Application Firewall (WAF) or a dedicated bot management solution.

Avoiding common and costly robots.txt mistakes

A single misplaced line can knock your whole site out of search results. The classic error is Disallow: / under User-agent: *, often left over from a staging site, which tells every crawler not to crawl any page. If your site suddenly disappears from search results, check robots.txt first.
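One way to guard against this is a pre-deploy check that fails when critical URLs become uncrawlable. The Python sketch below assumes a hypothetical `blocked_critical_urls` helper and URL list; it relies on the standard library’s `urllib.robotparser`, which is simpler than Googlebot’s parser, so it catches gross mistakes like a stray Disallow: / rather than every edge case.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical URLs that must always stay crawlable; adjust for your site.
MUST_BE_CRAWLABLE = ("https://yourdomain.com/", "https://yourdomain.com/tools/")


def blocked_critical_urls(robots_txt, urls=MUST_BE_CRAWLABLE, agent="Googlebot"):
    """Return the URLs that robots_txt would block for the given agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    staging_leftover = "User-agent: *\nDisallow: /\n"
    print(blocked_critical_urls(staging_leftover))
```

In a CI pipeline, you would fail the build whenever the returned list is non-empty.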

Blocking essential files

Blocking CSS or JavaScript can prevent Google from rendering pages correctly, which hurts SEO. For example, don’t block /assets/js/ if your site relies on JavaScript to load important content. Instead use Allow: for those resources when needed:

- Before (incorrect): Disallow: /assets/js/
- After (correct): Allow: /assets/js/

Conflicting rules and syntax errors

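Precedence rules differ between parsers. Googlebot uses the most specific (longest) matching rule regardless of order, but simpler parsers may stop at the first rule that matches, so listing the more specific rule first works for both. For example, to block a /private/ folder but allow a single PDF inside it: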

```text
Allow: /private/public-report.pdf
Disallow: /private/
```

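Also watch for typos. A misspelled directive like "dissallow" is silently ignored. Check changes with a robots.txt parser before deploying, then confirm that Google fetched the new file using the robots.txt report in Google Search Console, which replaced the older robots.txt Tester.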
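A small pre-deploy lint can catch misspellings like this. The Python sketch below is a hypothetical helper, not an existing tool: it only recognizes the four directives covered in this guide, so extend `KNOWN_FIELDS` if your file relies on others.

```python
# Directives covered in this guide; extend if your file uses others.
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap"}


def lint_robots_txt(text):
    """Return (line_number, message) pairs for lines a crawler would ignore."""
    problems = []
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append((number, f"missing ':' in {raw.strip()!r}"))
            continue
        field = line.split(":", 1)[0].strip().lower()  # field names are case-insensitive
        if field not in KNOWN_FIELDS:
            problems.append((number, f"unknown directive {field!r}"))
    return problems


if __name__ == "__main__":
    sample = "User-agent: *\nDissallow: /admin/\nSitemap: https://yourdomain.com/sitemap.xml\n"
    for number, message in lint_robots_txt(sample):
        print(f"line {number}: {message}")
```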

Remember, robots.txt is a polite request, not a guarantee. Many major publishers are blocking AI training bots, but determined scrapers can ignore your file entirely.4

Common questions about robots.txt files

Robots.txt vs. noindex: what’s the difference?

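Robots.txt controls crawling, while a noindex tag controls indexing. Use robots.txt to keep crawlers out of sections like admin pages; use noindex on pages you want crawled but not shown in search results, such as temporary landing pages. Don’t combine the two on the same URL: if robots.txt blocks a page, crawlers never see its noindex tag, and the URL can still appear in results if other pages link to it.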

Should I add my sitemap to my robots.txt file?

Yes. Adding Sitemap: https://www.yourdomain.com/sitemap.xml helps crawlers discover and index deep-linked, high-value content more quickly. Use the full, absolute URL, and if you use multiple sitemaps, add one Sitemap: line per file.
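To confirm that every Sitemap line is picked up, you can read them back with the standard library’s `urllib.robotparser` (the `site_maps()` method needs Python 3.8 or later). In this sketch, the second sitemap URL is a hypothetical example.

```python
from urllib.robotparser import RobotFileParser

# sitemap-tools.xml is a hypothetical second sitemap for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

Sitemap: https://www.yourdomain.com/sitemap.xml
Sitemap: https://www.yourdomain.com/sitemap-tools.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Sitemap lines apply to the whole file, independent of User-agent groups.
print(parser.site_maps())
```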

Can robots.txt stop bad bots and scrapers?

Robots.txt helps but isn’t foolproof. It’s effective against well-behaved crawlers, but malicious scrapers often ignore it. For robust protection, combine robots.txt with server-level controls, a WAF, and bot management tools.

Ready to boost your site’s engagement and SEO with interactive tools? With MicroEstimates, you can build and embed custom calculators on your site. Try these tools to support lead generation and user engagement:

Quick Q&A — common reader questions

What’s the simplest robots.txt for most sites?

Start with:

```text
User-agent: *
Disallow: /wp-admin/
Sitemap: https://yourdomain.com/sitemap.xml
```

How do I block GPTBot or other AI crawlers?

Add a User-agent directive for each crawler and set Disallow: / beneath it.

How do I test my robots.txt before deploying?

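Parse the file with a robots.txt parser and check a list of important URLs against it before you deploy. After deploying, use the robots.txt report in Google Search Console and your server logs to confirm crawlers behave as expected.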
