Unlock powerful SEO and performance insights by analyzing log files. This guide shows you how to find crawl budget waste, fix errors, and boost your rankings.

December 24, 2025

A Practical Guide to Analyzing Log Files for SEO

Introduction

Let’s be honest: most people treat log files like a wall of technical gibberish best left to the IT team. But those files contain the ground truth about your website’s health and how search engines truly see it. Unlike polished analytics reports, server logs give a raw, unfiltered record of every request to your server — including visits from crawlers like Googlebot. Use them and you move from guessing to knowing.

Why Log File Analysis Is Your SEO Secret Weapon

A tool like Google Analytics shows how users behave after they land on your site. Log files show how search engines discover and evaluate your site in the first place. That difference is critical: if bots can’t find or efficiently crawl your key pages, your target audience never gets the chance to see them. By digging into logs, you’re looking at data many competitors ignore.

Uncover What’s Really Happening Behind the Scenes

Log analysis replaces assumptions with timestamps and facts. Instead of wondering whether Google crawled your new post, you can see the exact visit time and frequency. That direct insight lets you spot issues before they tank rankings and find optimization opportunities hiding in plain sight.

Here are a few outcomes you can expect:

- Pinpoint crawl budget waste: Are bots spending time on low-value URLs, parameter-heavy pages, or redirect chains that stop them from reaching your important content?

- Find “invisible” errors: Unearth 4xx and 5xx server errors users never report but that damage SEO and UX.

- Verify technical SEO fixes: Confirm whether a migration, sitemap change, or robots update took effect.

- Understand crawler behavior: See which site sections are crawled most frequently — a clue to what search engines deem important.

Log files are an objective record of reality: not a sample, but the complete story of every request your server handled. That gives you undeniable evidence to build a smarter SEO strategy.

Key Insights You Can Uncover from Log Files

| Data Type | Potential Insights and Business Value |
|---|---|
| Crawl Frequency | Discover which pages crawlers consider important. High crawl rates on key pages are good; high rates on low-value pages signal wasted crawl budget. |
| Status Codes | Identify critical issues like 404 (Not Found) or 503 (Service Unavailable) errors that prevent indexing and harm UX. |
| Bot Identification | Verify that you’re being crawled by legitimate bots (like Googlebot) and not scrapers or malicious agents. |
| Crawl Delays | Pinpoint slow-loading pages that frustrate users and crawlers, which can lead to lower rankings. |
| URL Discovery | See exactly which URLs bots find and crawl, including old redirects or pages you thought were gone. |

This direct visibility gives you a clear view of the technical foundation beneath your SEO performance.
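On bot identification specifically: a user agent string can be spoofed, so the string alone proves little. One widely documented check is a reverse DNS lookup on the requesting IP, followed by a forward lookup to confirm the hostname resolves back to the same IP. The sketch below assumes that approach. The function name `is_verified_googlebot` and the injectable lookup parameters are illustrative, and the accepted hostname suffixes are an assumption you should check against Google's current guidance.

```python
import socket

# Assumed hostname suffixes for genuine Googlebot hosts; confirm before relying on them.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")

def is_verified_googlebot(ip,
                          reverse_lookup=socket.gethostbyaddr,
                          forward_lookup=socket.gethostbyname_ex):
    """Return True if ip reverse-resolves to a Google host that resolves back to ip."""
    try:
        hostname = reverse_lookup(ip)[0]
        if not hostname.endswith(GOOGLE_SUFFIXES):
            return False
        # Forward-confirm: the hostname must map back to the original address.
        return ip in forward_lookup(hostname)[2]
    except OSError:
        # Covers failed lookups (socket.herror and socket.gaierror subclass OSError).
        return False
```

The lookup functions are parameters so the check can be exercised offline with stubs. `socket.gethostbyname_ex` handles IPv4 only, so IPv6 crawler addresses would need a different forward lookup.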

The importance of this is growing: the global log management market is projected to expand significantly over the next few years [1], making log analysis a strategic capability, not just a niche task.

Bringing Your Scattered Log Data Together

First, find your logs. In real-world setups they’re rarely in one tidy folder; they’re spread across web servers, app instances, CDNs, firewalls, and cloud services. Analyzing them server by server makes it nearly impossible to spot sitewide trends, so before any analysis, centralize that data in a single location.

This turns disparate text files into a single source of truth. From there you can spot crawl anomalies and drive real SEO growth.

Finding Your Core Log Files

Common sources include:

- Nginx: typically in /var/log/nginx/access.log and /var/log/nginx/error.log

- Apache: often in /var/log/apache2/ (Debian) or /var/log/httpd/ (Red Hat)

Modern stacks add CDN, WAF, load balancer, and application logs. Each source can have a different format and location, which is exactly why centralized logging is non-negotiable.

Centralizing for Clarity and Power

Centralized logging uses a lightweight shipper or agent (e.g., Filebeat) to forward log lines to a central storage and analysis system. Open-source options like the ELK Stack are popular for self-hosting, and many cloud services provide managed alternatives. Centralization lets you run queries across your entire infrastructure and trace full request journeys from CDN to application server [2].

This unified view is a game-changer for spotting issues. A sudden spike in 404s across multiple servers points to a sitewide deployment problem — something you’d miss by inspecting isolated files.

Overcoming Real-World Collection Challenges

Common hurdles:

- Mismatched formats across sources

- High data volume (gigabytes or terabytes per day)

- Security and privacy concerns in log contents

Don’t just collect raw files; build a pipeline to collect, process, and secure your logs so the data becomes reliable and usable.
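Two of these hurdles, mismatched formats and privacy, can often be handled in a small normalization step inside the pipeline. The following is a minimal sketch, assuming each source already yields a timezone-aware timestamp and a client IP. The helper names `to_utc` and `anonymize_ip`, and the /24 and /48 masking widths, are illustrative choices and not a compliance recommendation.

```python
import ipaddress
from datetime import timezone

def to_utc(timestamp):
    """Convert a timezone-aware datetime to UTC so all sources share one clock."""
    if timestamp.tzinfo is None:
        raise ValueError("naive timestamp: attach the source's timezone first")
    return timestamp.astimezone(timezone.utc)

def anonymize_ip(ip):
    """Zero the host part of an address (assumed widths: /24 for IPv4, /48 for IPv6)."""
    address = ipaddress.ip_address(ip)
    prefix = 24 if address.version == 4 else 48
    network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
    return str(network.network_address)
```

If you plan to verify crawler IPs, run that check before masking, because a masked address can no longer be looked up.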

Turning Raw Logs into Structured Insights

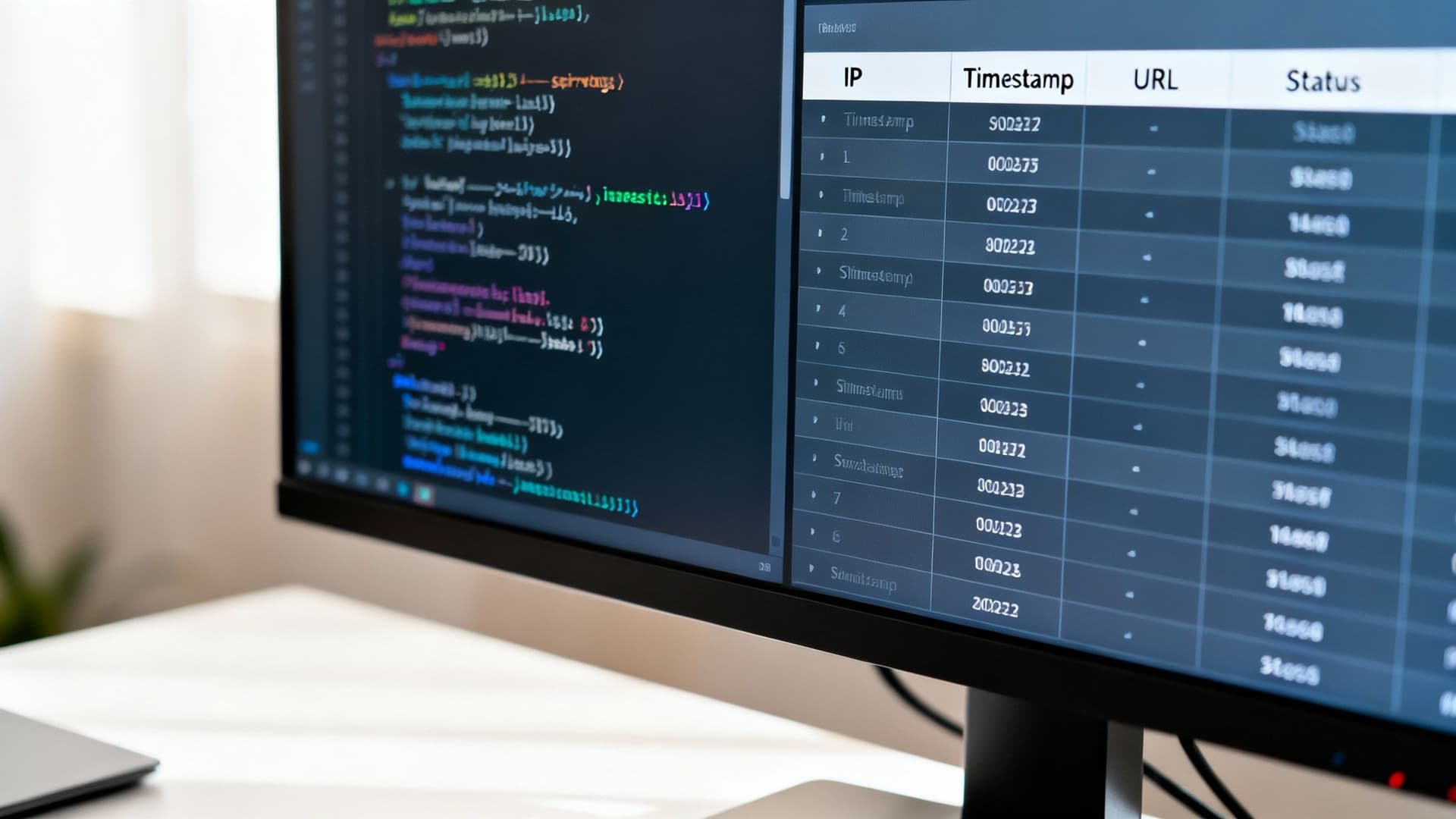

With all logs centralized, the next step is parsing: transforming raw lines into named fields like ip, timestamp, request_url, status_code, and user_agent. Parsing turns noise into a signal you can query.

The Power of Parsing

Once parsed, you can filter for things like user_agent = “Googlebot” or request_url contains “/products/”. Parsing enables dashboards, charts, and reports your marketing and engineering teams actually use.
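As a rough illustration of that kind of filter, the sketch below assumes parsed records are plain dictionaries keyed by the field names used above. The sample records and the helper name `matches` are made up for the example.

```python
# Hypothetical parsed records; real ones would come from your log pipeline.
records = [
    {"request_url": "/products/widget", "status_code": 200,
     "user_agent": "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"},
    {"request_url": "/blog/post", "status_code": 200,
     "user_agent": "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"},
    {"request_url": "/products/widget", "status_code": 200,
     "user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"},
]

def matches(record, agent_token="Googlebot", path_fragment="/products/"):
    """True when the user agent contains agent_token and the URL contains path_fragment."""
    return agent_token in record["user_agent"] and path_fragment in record["request_url"]

googlebot_product_hits = [r for r in records if matches(r)]
```

A substring match on the user agent is only a first pass, since any client can claim to be Googlebot.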

Breaking Down Logs with Regular Expressions

Regex is the common tool for parsing. For example, an Nginx entry like:

192.168.1.1 - - [10/Oct/2023:13:55:36 +0000] "GET /products/widget HTTP/1.1" 200 1234 "https://example.com/" "Mozilla/5.0..."

can be parsed into IP, timestamp, request, status, bytes, referrer, and user agent. Many platforms provide reusable patterns (e.g., Grok) to speed this up and avoid reinventing the wheel.
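Here is one way that parsing could look in Python, as a sketch that assumes the standard "combined" access log layout of the sample line. The pattern name `COMBINED` and the function `parse_line` are illustrative; a custom `log_format` would need a different pattern.

```python
import re
from datetime import datetime

# Regex for the "combined" layout: ip, two ignored fields, [timestamp],
# "request", status, bytes, "referrer", "user agent".
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<request_url>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status_code>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)

def parse_line(line):
    """Return a dict of named fields, or None if the line does not match."""
    match = COMBINED.match(line)
    if match is None:
        return None
    record = match.groupdict()
    record["status_code"] = int(record["status_code"])
    record["bytes"] = 0 if record["bytes"] == "-" else int(record["bytes"])
    record["timestamp"] = datetime.strptime(record["timestamp"], "%d/%b/%Y:%H:%M:%S %z")
    return record

sample = ('192.168.1.1 - - [10/Oct/2023:13:55:36 +0000] '
          '"GET /products/widget HTTP/1.1" 200 1234 '
          '"https://example.com/" "Mozilla/5.0..."')
record = parse_line(sample)
```

Lines that do not fit the pattern, such as malformed requests, return `None` so they can be counted and inspected separately instead of silently skewing the results.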

Automating Parsing with Tools and Patterns

Modern log platforms include pre-built patterns for common formats, removing much of the regex burden. Once structured, your logs become a queryable dataset you can use for process mining, crawl analysis, performance troubleshooting, and more.

Finding Actionable SEO and Performance Insights

With centralized, structured logs, you can move from technical setup to real answers. Logs are the ultimate source of truth for how crawlers and users interact with your website.

Uncovering Crawl Budget Waste

Crawl budget is finite. If bots expend requests on low-value pages, your important pages suffer. Use logs to answer:

- Which URLs are most frequently crawled by Googlebot?

- How many 404s is a crawler hitting?

- Are bots trapped in redirect chains?

Fixing wasteful patterns improves indexing for money-making pages and drives organic traffic.
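The questions above can be turned into a small summary over parsed records. This is a sketch that assumes dictionaries with `request_url`, `status_code`, and `user_agent` keys. The function name `crawl_summary` is hypothetical. It also only counts redirect responses; an access log records each hop as a separate request, so reconstructing full chains would need the redirect targets from another source.

```python
from collections import Counter
from urllib.parse import urlsplit

REDIRECT_CODES = {301, 302, 303, 307, 308}

def crawl_summary(records, bot_token="Googlebot", top_n=5):
    """Summarize where a crawler spends its requests."""
    bot_hits = [r for r in records if bot_token in r["user_agent"]]
    return {
        "total": len(bot_hits),
        # Most frequently crawled URLs as (url, hits) pairs.
        "top_urls": Counter(r["request_url"] for r in bot_hits).most_common(top_n),
        # Requests that ended in 404 Not Found.
        "not_found": sum(r["status_code"] == 404 for r in bot_hits),
        # Requests answered with a redirect (a proxy for redirect waste).
        "redirects": sum(r["status_code"] in REDIRECT_CODES for r in bot_hits),
        # Requests to URLs carrying a query string.
        "parameterized": sum(bool(urlsplit(r["request_url"]).query) for r in bot_hits),
    }
```

Comparing `not_found`, `redirects`, and `parameterized` against `total` gives a quick sense of how much crawl activity lands on URLs you would not choose to have crawled.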

Pinpointing Performance Bottlenecks

Logs reveal slow endpoints via a time-taken field or similar. Filter for high-latency requests to find slow pages or API calls. If checkout steps are slow, that’s an immediate priority for engineering. Persistent slow responses are a strong signal you may need infrastructure upgrades; log evidence helps build the business case for investment.
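A minimal sketch of that latency filter follows. It assumes each parsed record carries a `request_time` field in seconds, which the default access log layout does not include, so your log format would need to add it. The function name `slowest_urls` and the `min_hits` cutoff are illustrative.

```python
from collections import defaultdict
from statistics import mean

def slowest_urls(records, top_n=10, min_hits=1):
    """Return (url, average_seconds, hits) tuples, slowest first."""
    timings = defaultdict(list)
    for record in records:
        timings[record["request_url"]].append(record["request_time"])
    averages = [
        (url, mean(samples), len(samples))
        for url, samples in timings.items()
        if len(samples) >= min_hits  # skip URLs with too few samples to trust
    ]
    return sorted(averages, key=lambda row: row[1], reverse=True)[:top_n]
```

Averages hide outliers, so for a real investigation you may also want a high percentile per URL; the mean is used here only to keep the example short.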

Validating Your Technical SEO Efforts

After a robots.txt change, sitemap update, or migration, confirm outcomes in logs. Did crawlers stop requesting disallowed URLs? Are new sections being visited? This feedback loop turns assumptions into facts.
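For the robots.txt case, one possible check is to list crawler requests that hit paths your rules disallow. The sketch below uses Python's standard `urllib.robotparser` and assumes parsed records with `request_url` and `user_agent` keys; the function name `disallowed_hits` is hypothetical. Note that the standard library parser does simple prefix matching, so rules that rely on wildcard extensions may not be evaluated the way a given search engine evaluates them.

```python
from urllib.robotparser import RobotFileParser

def disallowed_hits(records, robots_txt, agent="Googlebot"):
    """Return URLs the given crawler requested even though robots.txt disallows them."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        r["request_url"]
        for r in records
        if agent in r["user_agent"] and not parser.can_fetch(agent, r["request_url"])
    ]
```

Feed it only records from after the change went live, and allow some time for crawlers to pick up the new file before treating remaining hits as a problem.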

Enterprise adoption of log analysis tools is high: platforms like Splunk and Elastic dominate because logs are crucial for operations, security, and performance [3][4].

Moving Beyond Manual Analysis: Dashboards and Alerts

Manual log inspection is reactive and slow. Build dashboards and alerts so problems surface in real time and your team acts before customers notice.

Building Early-Warning Dashboards

Start with a few essential visualizations:

- Status code trends (2xx, 3xx, 4xx, 5xx over time)

- Googlebot crawl activity (requests per hour/day)

- Slowest responding pages (top 10 by average response time)

Dashboards stop you from hunting for anomalies; they let the data surface them.
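The data behind a status code trend chart is just a count per time bucket and status class. A minimal sketch, assuming parsed records with a `datetime` in `timestamp` and an integer `status_code`; the function name `status_trend` and the hourly bucket are illustrative choices:

```python
from collections import Counter

def status_trend(records):
    """Count requests per (hour, status class) pair, e.g. (2023-10-10 13:00, '4xx')."""
    buckets = Counter()
    for record in records:
        # Truncate the timestamp to the start of its hour.
        hour = record["timestamp"].replace(minute=0, second=0, microsecond=0)
        status_class = f"{record['status_code'] // 100}xx"
        buckets[(hour, status_class)] += 1
    return buckets
```

Most log platforms compute this aggregation for you; the point of the sketch is only to show how little is needed to get a first trend line from parsed records.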

Setting Up Proactive Alerts

Create alert rules that notify teams when key metrics cross thresholds. Examples:

- IF 500-level errors > 50 in 5 minutes, THEN post to #dev-alerts

- IF Googlebot requests drop by 80% day-over-day, THEN notify SEO

Alerts let you respond in minutes, not hours or days, saving time and reducing impact on customers.
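The two example rules can be sketched as plain functions that return whether a rule has fired, leaving the notification itself (chat message, email) to whatever tool you use. The class name `ErrorRateAlert` and the function `crawl_dropped` are hypothetical, and the thresholds simply mirror the examples above.

```python
from collections import deque
from datetime import timedelta

class ErrorRateAlert:
    """Fires when more than `threshold` 5xx responses fall inside a sliding window."""

    def __init__(self, threshold=50, window=timedelta(minutes=5)):
        self.threshold = threshold
        self.window = window
        self._errors = deque()  # timestamps of recent 5xx responses

    def observe(self, timestamp, status_code):
        """Feed requests in time order; return True while the rule is firing."""
        if 500 <= status_code <= 599:
            self._errors.append(timestamp)
        # Drop errors that have aged out of the window.
        while self._errors and timestamp - self._errors[0] > self.window:
            self._errors.popleft()
        return len(self._errors) > self.threshold

def crawl_dropped(yesterday_hits, today_hits, drop_ratio=0.8):
    """True when crawler requests fell by at least drop_ratio day-over-day."""
    if yesterday_hits == 0:
        return False  # no baseline to compare against
    return (yesterday_hits - today_hits) / yesterday_hits >= drop_ratio
```

Keeping the rule logic separate from delivery makes it easy to test thresholds against historical logs before wiring them to a channel.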

Managing Log Costs and Performance at Scale

High traffic turns log volume from megabytes into terabytes. Storage and processing costs can balloon if you index everything. Smarter collection policies control costs without losing essential insights.

Smart Strategies for Log Reduction

- Filter out noisy, low-value entries at the shipper (e.g., repetitive CDN health checks)

- Sample non-critical logs (keep a representative subset of debug/info events)

- Enforce data retention tiers: keep hot, searchable logs for 30 days; move older data to cold storage

These approaches reduce costs while preserving the data you actually need for analysis.
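The first two strategies can be combined in a single keep-or-drop decision applied before indexing. The sketch below assumes parsed access log records; the health check paths in `HEALTH_CHECK_PATHS`, the 10% default sample rate, and the choice to always keep errors and Googlebot traffic are all assumptions to adapt, and `keep_record` is a hypothetical name.

```python
import zlib

# Hypothetical health check endpoints; replace with the ones your stack uses.
HEALTH_CHECK_PATHS = {"/healthz", "/ping"}

def keep_record(record, sample_rate=0.1):
    """Decide whether a parsed record should be indexed."""
    # Filter: drop repetitive, low-value health checks outright.
    if record["request_url"] in HEALTH_CHECK_PATHS:
        return False
    # Always keep the entries most useful for SEO and debugging.
    if record["status_code"] >= 400 or "Googlebot" in record["user_agent"]:
        return True
    # Sample the rest deterministically, so the same record always gets the same decision.
    key = f"{record['ip']}|{record['request_url']}|{record['timestamp']}".encode()
    return zlib.crc32(key) % 1000 < sample_rate * 1000
```

Hash-based sampling is used instead of random sampling so that reprocessing the same logs yields the same subset.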

Quantifying the Impact of Log Reduction

Model savings from data reduction to build a business case. Under volume-based pricing, a 20% cut in indexed volume lowers the monthly indexing bill by roughly the same proportion. Track those savings over time to demonstrate long-term ROI.
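A back-of-the-envelope model might look like the following. It assumes a simple per-gigabyte indexing price, which is a simplification of how many platforms bill, and every number you pass in is your own input, not a benchmark. The function names are illustrative.

```python
def monthly_indexing_cost(gb_per_day, price_per_gb, days=30):
    """Cost of indexing a steady daily volume for one month."""
    return gb_per_day * days * price_per_gb

def monthly_savings(gb_per_day, price_per_gb, reduction=0.20, days=30):
    """Savings from cutting indexed volume by `reduction` (0.20 means 20%)."""
    return monthly_indexing_cost(gb_per_day, price_per_gb, days) * reduction
```

Calling `monthly_savings` with your actual daily volume and contract price gives a first figure for the business case; tiered pricing or retention charges would need a more detailed model.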

Answering Common Log File Analysis Questions

How Much Log Data Should I Keep?

Aim to keep logs in fast, searchable storage for at least 30 days for immediate debugging. Archive older logs to cold storage for trend analysis or compliance.

Is Real-Time Analysis Always Necessary?

No. Real-time matters for operational emergencies and security. For SEO insights like crawl behavior, daily or weekly checks are usually sufficient.

Can Log Analysis Save My Business Money?

Yes. Better crawl budget utilization and faster site performance can both increase organic traffic and conversions. Reducing unnecessary log volume also cuts storage and processing costs.

Ready to turn insights into action? Use the right data and tools to quantify the impact of technical improvements, and build a clear business case with evidence. For example, you can model financial impact with tools such as Business Valuation Estimator.

Quick Q&A — Common Questions and Short Answers

Q: What exactly will I learn from server logs? A: You’ll see every request to your site — which URLs bots crawl, status codes returned, response times, and which agents are accessing your pages.

Q: How soon can I expect benefits? A: Some wins, like fixing redirect chains or removing low-value URLs from crawl paths, can improve indexing within days. Performance fixes and infrastructure changes may take longer but have measurable ROI.

Q: Do I need complex tools to start? A: No. You can begin by centralizing logs and parsing a few core fields. Dashboards and basic alerts provide large immediate value; scale up tooling as needs grow.
